Convolution

Published: 25th March 2025

Intro

Let's talk systems. You hear people talk about systems and how complex some systems are. Systems surround us in every aspect of life. Think about the economy: it consists of consumers, businesses, governments, and resources interacting through countless transactions, and it produces an output, economic activity, which affects all our lives. A car is a system which consists of smaller systems interacting with each other and producing not just one but multiple outputs. It integrates mechanical, electrical, and computational subsystems that collectively provide transportation. For example, when you press the accelerator pedal these interacting smaller systems produce the velocity that moves the car. In audio processing, a filter is a system which takes in an input and filters out a part of that input. You'd not want your filter to be unstable and modify the input signal in an inconsistent manner. So how do you make sure, when designing such systems, that they produce the same output given the same input, now or later? The challenge across all these domains is ensuring consistency and predictability: a system should behave the same way given the same inputs, regardless of when or how it's operated.

A system can be defined as a set of interconnected components that work together to achieve specific outcomes. In terms of DSP, a system can be defined as any process that produces an output signal in response to an input signal. A signal describes how one parameter varies with another parameter. For example, an analog signal in DSP is a voltage changing over time. Systems and signals can be discrete or continuous. Mathematically, continuous signals use parentheses, x(t), y(t), while discrete signals use brackets, x[n], y[n], where n is the sample number. Also, signals use lowercase letters. The name given to a signal usually describes the parameters of the signal, e.g. v(t) is voltage over time.

System Properties

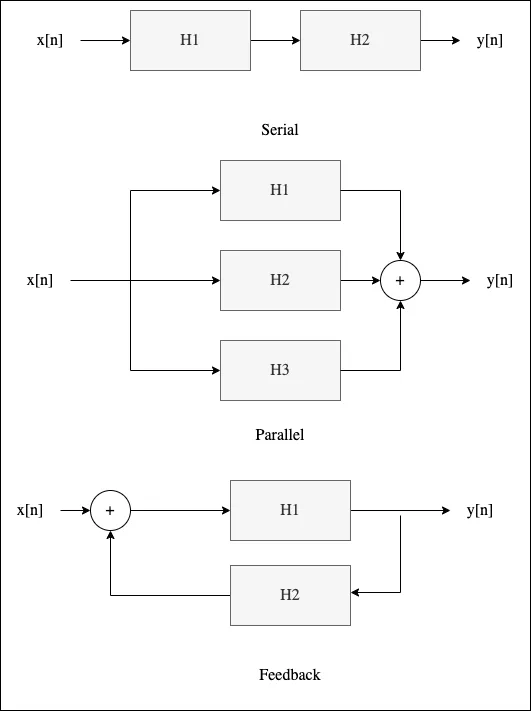

Let's take the accelerator pedal as an example. The pressure you apply to the pedal translates to the velocity produced by the system: p(t) -> system -> v(t), where p(t) is the pedal pressure and v(t) is the velocity. Or let's take a system from outside the engineering domain, such as the price of petrol on a given day. The price of a barrel of oil goes into a system like the economy, and what you get out is the price of a litre of petrol: o[n] -> system -> p[n]. Mathematically a system is denoted by H, so p[n] = H(o[n]). In real life there is seldom a simple system consisting of just one unit; usually multiple subsystems are connected to each other in series, in parallel, or in a feedback arrangement. Cruise control in a car is a good example of a feedback system.

Such systems have certain properties that we need to understand in order to study them. The first of these properties is causality. A system is causal if the output at time n depends only on the input up to and including time n.

y[n] = x[n] - 3x[n-1]

This system is causal. To check whether a system is causal, we check if the output depends on a future value of the input. For example, y[n] = x[n+2] is not causal, as it depends on a future value that we don't have yet.
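
Here is a minimal Python sketch of that check. The function names, the sample values, and the choice to treat samples before the start of the signal as zero are my own assumptions for illustration. The idea is to pretend we are at time n = 2 and have only received the samples up to that point.

```python
def causal_system(x, n):
    """y[n] = x[n] - 3*x[n-1]; samples before the start are assumed to be 0."""
    previous = x[n - 1] if n >= 1 else 0
    return x[n] - 3 * previous


def non_causal_system(x, n):
    """y[n] = x[n+2]; needs a sample two steps in the future."""
    return x[n + 2]


x = [1, 4, 2, 7, 5]  # made-up input samples

# Suppose we are at time n = 2 and have only received x[0], x[1], x[2].
received = x[:3]

y_causal = causal_system(received, 2)  # 2 - 3*4 = -10

try:
    non_causal_system(received, 2)
    future_needed = False
except IndexError:
    future_needed = True  # x[4] has not arrived yet
```

The causal system can produce its output from what has already arrived, while the non-causal one fails because the sample it needs does not exist yet.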

This one sounds pretty obvious. Your car doesn't know how you're going to interact with it. The car will only react when you interact with it, making it a causal system. But we also see many non-causal systems in real life. Systems in pattern recognition, image processing, etc. are often non-causal. If you want to blur a part of an image, you take each pixel together with all its surrounding pixels and average them. If this was causal you'd only be allowed to access pixels up to that part of the image, and not the later ones, for the averaging. Whether a system has to be causal depends largely on the type of signal it's processing: a signal arriving in real time only offers past and present samples, while stored data such as an image is available all at once.

Studying systems is crucial for DSP. For example, when you speak on a phone you want your voice to sound like you on the other side. But the input on the transmission line is different from the output on the other side, so if you understand how the transmission line (the system) works, you can compensate for the unwanted effects on the output signal. But where do we start this study? There are so many systems out there in the world that studying each and every one would take a lot of effort. Luckily, since these systems are bound by physical laws, many of them behave in a way that lets us categorise them as linear systems, which makes them much easier to study. Linearity is the second property of systems. Let's understand this property in detail.

Linearity

A system is linear if it satisfies these two mathematical properties: homogeneity and additivity. There is another property called shift invariance (or time invariance) which is not a requirement for linearity but is a must in the DSP domain. Let's understand all 3 of these properties.

A system is homogeneous if a change in the amplitude of the input results in a corresponding change in the amplitude of the output signal. So if you multiply the input by a value to scale it, the output should also be scaled by that value. A resistor is a good example of a homogeneous system when the input is the voltage v(t) across it and the output is the current through it: any change in voltage results in a proportional change in the current, and Ohm's law guarantees this. But if you think about it, the same resistor is a non-homogeneous system if you take the power dissipated by the resistor as the output. Since power is proportional to the square of the voltage (p ∝ v²), doubling the voltage increases the power by a factor of 4 rather than 2.
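
The resistor example can be checked with a few lines of Python. This is only a sketch: the resistance of 100 ohms and the 5 volt input are made-up values, and the function names are mine.

```python
R = 100.0  # hypothetical resistance in ohms


def current(v):
    """Ohm's law: i = v / R."""
    return v / R


def power(v):
    """Power dissipated in the resistor: p = v^2 / R."""
    return v * v / R


v = 5.0      # input voltage in volts (made-up value)
scale = 2.0  # double the input

# Homogeneous: scaling the input by 2 scales the output by 2.
current_ratio = current(scale * v) / current(v)

# Not homogeneous: scaling the input by 2 scales the output by 4.
power_ratio = power(scale * v) / power(v)
```

The same component passes or fails the homogeneity test depending on which quantity you treat as the output.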

Let's say you have an input x₁[n] which goes through a system and gives y₁[n]. Now you take another signal x₂[n] which gives an output of y₂[n]. A system is additive if the sum of the inputs produces the sum of those individual outputs, that is, x₁[n] + x₂[n] gives y₁[n] + y₂[n]. What this means is that the signals should pass through the system without interacting. Think of it this way: when you're on a conference call you can hear each person's voice as theirs even if they are all talking at the same time. You don't hear a new kind of voice which is an amalgamation of the individual voices coming through the phone.

Both of the properties can be described together in the following manner:

a·x₁[n] + b·x₂[n] -> system -> a·y₁[n] + b·y₂[n]

for any inputs x₁, x₂ and any constants a, b.
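
As a rough illustration, the combined rule can be tested numerically. The sketch below reuses the earlier system y[n] = x[n] - 3x[n-1] and compares it with a squaring system that I picked as a non-linear counterexample. The function names, test signals, and constants are all assumptions of mine. Passing the check for one pair of inputs does not prove linearity, but failing it once is enough to rule it out.

```python
def system(x):
    """Linear example: y[n] = x[n] - 3*x[n-1], with x[-1] taken as 0."""
    return [x[n] - 3 * (x[n - 1] if n >= 1 else 0) for n in range(len(x))]


def squarer(x):
    """Non-linear example: y[n] = x[n]^2."""
    return [s * s for s in x]


def obeys_superposition(H, x1, x2, a, b):
    """Check H(a*x1 + b*x2) == a*H(x1) + b*H(x2) for one choice of inputs."""
    combined_input = [a * p + b * q for p, q in zip(x1, x2)]
    lhs = H(combined_input)
    rhs = [a * p + b * q for p, q in zip(H(x1), H(x2))]
    return lhs == rhs


x1 = [1, 2, 3, 4]   # made-up test signals
x2 = [0, -1, 5, 2]

linear_ok = obeys_superposition(system, x1, x2, a=2, b=-3)
squarer_ok = obeys_superposition(squarer, x1, x2, a=2, b=-3)
```

Here `linear_ok` comes out true and `squarer_ok` comes out false, because squaring makes the two inputs interact.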

Shift or time invariance says the system behaves the same way regardless of when the input is applied. A shift in the input signal will result in an identical shift in the output signal. If we delay the input signal by some amount, the output should also be delayed by the same amount.

x[n-n₀] -> system -> y[n-n₀]
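
One way to test this is to compare "delay, then process" with "process, then delay". The sketch below does that for one input. The helper names, the zero padding, and the time-varying example y[n] = n·x[n] are my own choices for illustration.

```python
def delay(x, n0):
    """Shift a signal right by n0 samples, padding with zeros and keeping the length."""
    return [0] * n0 + x[: len(x) - n0]


def lti_system(x):
    """Time-invariant example: y[n] = x[n] - 3*x[n-1], with x[-1] taken as 0."""
    return [x[n] - 3 * (x[n - 1] if n >= 1 else 0) for n in range(len(x))]


def time_varying_system(x):
    """Time-varying example: y[n] = n * x[n], so the gain depends on when a sample arrives."""
    return [n * x[n] for n in range(len(x))]


def is_shift_invariant(H, x, n0):
    """Compare 'delay then process' with 'process then delay' for one input."""
    return H(delay(x, n0)) == delay(H(x), n0)


x = [5, 3, 8, 2, 0, 0]  # trailing zeros leave room for the shift

lti_ok = is_shift_invariant(lti_system, x, 2)
varying_ok = is_shift_invariant(time_varying_system, x, 2)
```

The first system gives the same result either way, while the second does not, since its behaviour depends on the sample index.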

Not all systems are time invariant. For example, the speed of downloading a file at 3am is not the same as the speed at 3pm: internet traffic is not time invariant. So why are additivity and homogeneity requirements for linearity but not time invariance? This is because linearity is a pretty broad concept and sometimes does not involve a signal at all. For example, consider a shopkeeper who sells oranges for $2 per crate and mangoes for $5 per crate. If the shopkeeper only sells oranges they will receive $10 for 5 crates and $20 for 10 crates, which makes the exchange homogeneous. If they sell 20 crates of oranges and 10 crates of mangoes they will get $2 x 20 + $5 x 10 = $90, which is the same amount as if the two had been sold individually, making the transaction additive. The transaction being both additive and homogeneous makes it a linear process, but since there are no signals, time invariance need not be satisfied for the process to be linear.

Superposition of LTI systems

Real world systems are often modelled as Linear Time Invariant (LTI) systems, as an LTI model is often a good approximation of the corresponding real life system and its analysis is both easy and powerful. When dealing with linear systems, the only way signals can be combined is by scaling, which is multiplying the signal by a constant, followed by addition. A signal can't be multiplied by another signal. Combining signals through scaling and adding is called synthesis. The inverse operation is called decomposition.

Consider a signal x[n] passing through a system which produces output y[n]. Here the input signal can be decomposed into a group of simpler signals x₁[n], x₂[n], x₃[n], etc., which are called input signal components. Now each component is passed through the system, resulting in output signal components y₁[n], y₂[n], y₃[n], etc. These output signal components can be synthesised to form the final output y[n]. This is where things get interesting: the output signal obtained by this method is identical to the one produced by passing the input signal directly into the system. So instead of trying to understand how a system behaves when given a complicated signal, we can understand how it behaves when given each of the signal's simpler components. Here the input and output signals are each a superposition, i.e. a sum, of simpler signals. There are multiple ways to decompose a signal into simple components, and one of them is called impulse decomposition.

Impulse Decomposition

What you do in impulse decomposition is decompose the input signal into impulses. Ok, what are these impulses? An impulse is a signal composed of all zeros except a single non-zero point. Let's look at an example. If we have a 4 sample signal x[n] = {5, 3, 8, 2} the impulse decomposition would create 4 component signals:

- Component 1: {5, 0, 0, 0}

- Component 2: {0, 3, 0, 0}

- Component 3: {0, 0, 8, 0}

- Component 4: {0, 0, 0, 2}

Now you can pass these individual components to the system to understand how the system behaves for one impulse. The output the system produces for an impulse is called an impulse response. Mathematically we use something called a delta function to make these signal components. It is denoted by δ[n] and is a normalised impulse, i.e. sample number zero has a value of one and all other samples have a value of zero. It is sometimes also referred to as the unit impulse, since the sample at zero is one. When we say a system's impulse response is h[n], we're saying "this is how the system responds when the input is δ[n]." This gives us a standard reference point for comparing systems. Each component of a decomposed signal is just a scaled and shifted delta function. Refer to the above example. Component 1 is a delta function scaled by 5 and shifted by zero. Component 2 is a delta function scaled by 3 and shifted by one. This can be represented mathematically as:

x₁[n] = 5·δ[n-0] = {5, 0, 0, 0}

x₂[n] = 3·δ[n-1] = {0, 3, 0, 0}

x[n] = x[0]·δ[n] + x[1]·δ[n-1] + x[2]·δ[n-2] + ... + x[N-1]·δ[n-(N-1)]

This is written compactly as

$$x[n] = \sum_{k=0}^{N-1} x[k]\,\delta[n-k]$$

where:

- x[n] is the complete discrete signal

- x[k] is the amplitude of the kth sample

- δ[n-k] is the unit impulse (delta) function shifted by k positions

- N is the total number of samples in the signal
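
To make the decomposition concrete, here is a small Python sketch that splits the example signal {5, 3, 8, 2} into its impulse components and then adds them back together. The function names are my own and the signal is treated as a plain list of N samples.

```python
def delta(k, length):
    """Unit impulse shifted by k: 1 at index k, 0 everywhere else."""
    return [1 if i == k else 0 for i in range(length)]


def impulse_decompose(x):
    """Split x into len(x) components, each a scaled and shifted delta."""
    N = len(x)
    return [[x[k] * d for d in delta(k, N)] for k in range(N)]


def synthesise(components):
    """Add the components sample by sample to rebuild the signal."""
    return [sum(samples) for samples in zip(*components)]


x = [5, 3, 8, 2]
components = impulse_decompose(x)
# components == [[5, 0, 0, 0], [0, 3, 0, 0], [0, 0, 8, 0], [0, 0, 0, 2]]

rebuilt = synthesise(components)
# rebuilt == [5, 3, 8, 2]
```

Each component is x[k]·δ[n-k] for one value of k, and summing them over k gives back the original signal.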

Convolution

Let's summarise what we have discovered so far. First, the input signal is decomposed into a set of impulses which are nothing but scaled and shifted delta functions. Second, each of these scaled and shifted impulses is put through the system, which results in a correspondingly scaled and shifted version of the impulse response. Third, the overall output can be found by adding these scaled and shifted impulse responses. This means that if we know a system's impulse response, we know everything about the system: we can work out its output for any input.
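
The three steps can be sketched directly in Python. This is an illustrative version under my own assumptions: the name `convolve` is mine, the signals are finite lists, and the impulse response {1, -3} is the one you get by feeding δ[n] into the earlier system y[n] = x[n] - 3x[n-1].

```python
def convolve(x, h):
    """Add up one scaled and shifted copy of h for every sample of x."""
    y = [0] * (len(x) + len(h) - 1)
    for k, xk in enumerate(x):      # input sample k is the impulse x[k]*delta[n-k]
        for j, hj in enumerate(h):  # its response is x[k]*h[n-k], landing at index k + j
            y[k + j] += xk * hj
    return y


x = [5, 3, 8, 2]
h = [1, -3]  # impulse response of y[n] = x[n] - 3*x[n-1]

y = convolve(x, h)
# y == [5, -12, -1, -22, -6]

# Passing x straight through the system gives the same first four samples.
direct = [x[n] - 3 * (x[n - 1] if n >= 1 else 0) for n in range(len(x))]
# direct == [5, -12, -1, -22]
```

The output is one sample longer than the input here because the response to the last input sample spills over past the end of the signal.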

Ok but what exactly is this system and what are we trying to achieve with this system? In the context of audio processing, a system refers to any process that transforms an input audio signal into an output signal. Audio processing modules such as filters and reverbs take in audio signals and do some processing to give us the desired output. A filter selectively attenuates or boosts certain frequencies, a reverb simulates an acoustic environment, etc. These modules are the LTI systems we have been talking about so far. While designing such modules we need to adhere to the LTI principles, else we won't be able to achieve the desired results. The power of LTI systems lies in their predictability and mathematical elegance. We can fully understand the behaviour of an LTI system through its impulse response, which allows us to apply that same transformation consistently to any input signal through convolution. This approach is the foundation of audio effects from reverbs to equalisers. Without the LTI framework, convolution would fail to produce the consistent, predictable results that audio engineers and musicians rely on.

You cannot apply a system's impulse response to a signal by simply adding or multiplying the two. I mean, yes, you can add or multiply signals together, but the output you get from such an operation might not be what you are looking for. For example, consider an audio processor module which provides a reverb effect to the input signal. If you just add the input signal to the reverb module's impulse response you might just get a louder signal at the output. Or, if you just multiply the input signal by the module's impulse response you might change the amplitude of the signal but it won't have the reverb echoes. To actually create the reverb effect with its characteristic echoes and decay, you need to convolve the input signal with the impulse response, and that's where convolution comes in. Convolution is a mathematical operation just like multiplication and addition. Convolution takes two signals and produces a third signal, but the process works completely differently from, let's say, the addition of two numbers.
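
To see the difference, here is a toy comparison in Python. Everything in it is made up for illustration: the four-sample "dry" signal, the impulse response standing in for a reverb (the direct sound followed by two fading echoes), and the function name `convolve_signals`. A real reverb impulse response would be thousands of samples long.

```python
def convolve_signals(x, h):
    """Sum of scaled and shifted copies of h, one per sample of x."""
    y = [0.0] * (len(x) + len(h) - 1)
    for k, xk in enumerate(x):
        for j, hj in enumerate(h):
            y[k + j] += xk * hj
    return y


dry = [1.0, 0.5, 0.0, 0.0]                # short made-up input signal
impulse_response = [1.0, 0.0, 0.5, 0.25]  # toy "reverb": direct sound plus two echoes

# Sample-by-sample addition and multiplication.
added = [a + b for a, b in zip(dry, impulse_response)]
multiplied = [a * b for a, b in zip(dry, impulse_response)]

# Convolution: every input sample triggers its own copy of the echoes.
wet = convolve_signals(dry, impulse_response)
# wet == [1.0, 0.5, 0.5, 0.5, 0.125, 0.0, 0.0]
```

Adding just mixes the two signals together, and multiplying keeps only the first sample. Only the convolved output carries the original signal followed by delayed, quieter copies of it, and it runs longer than the input because the echoes keep going after the dry signal has stopped.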